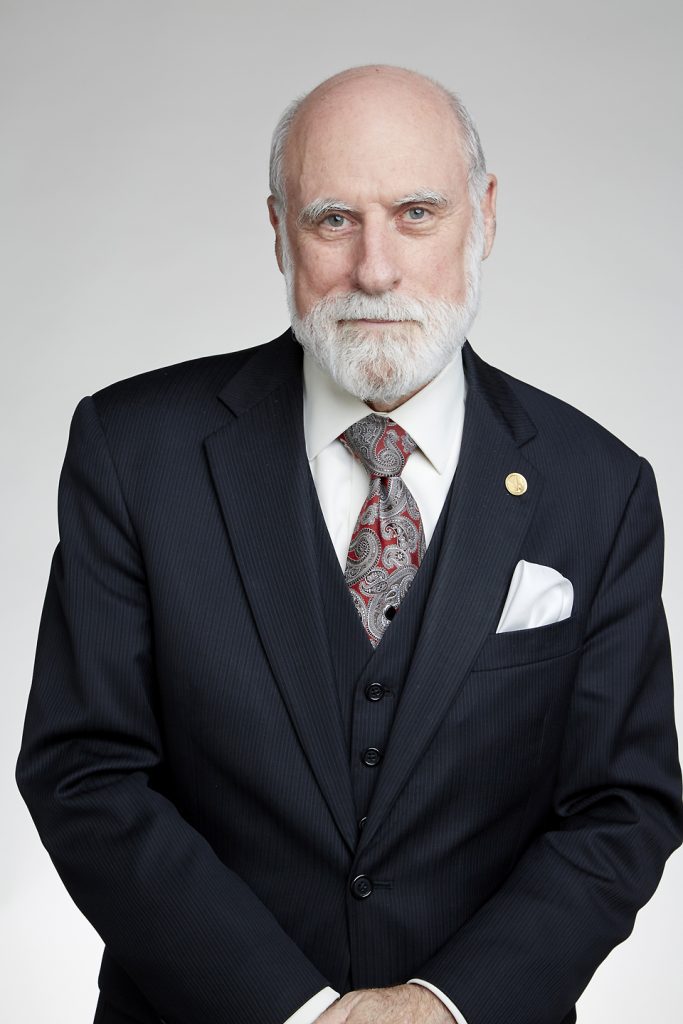

A special PTC conversation with Internet pioneer Dr. Vinton Cerf, Vice President and Chief Internet Evangelist at Google

How has the Internet developed, what can it do, and where is it going next? Does its past architecture somehow limit its future? Does such a vast global infrastructure carry inherent risks?

These are important questions for a technology that continues to reshape the world with massive socio-economic impact. Buried at the foundation of this global footprint is its key communications protocol—TCP/IP—that makes all wide-area network-to-network data transfer ultimately happen. Dr. Vinton Cerf—who, with Robert Kahn, originated the TCP/IP approach—gave his views on its evolution in a special interview for PTC.

Have map, no content

In the history of Internet development, TCP/IP is not only special because it is foundational; from its inception 45 years ago when Vinton Cerf and Robert Kahn envisaged new approaches, it also required a unique capability to think about the future.

In considering the future of internetworking between computers, the Internauts (as early researchers referred to themselves) were faced with a relatively blank canvas. They had, however, several years of experience with the ARPANET, which explored the interconnection of heterogeneous computers on a homogeneous network. In that experiment, protocols were developed for remote time-shared computer use, electronic mail, and file exchange. Those experiences informed the Internet design effort, which extended to the interconnection of heterogeneous networks, the solution to which would have profound global implications.

“Our intent—which I think we satisfied in large measure—was to design an architecture that would accommodate an arbitrary number of networks [as well as] networks with different technologies or technologies yet to be invented,” says Dr. Cerf. “Optical fibre, for example, was really still six or seven years [from being adopted in telecom networks].”

He continues: “So we were anticipating new technologies would come along and we wanted the architecture to accommodate those so we could put Internet packets on top of virtually anything. And since we were aware that applications would come that we didn’t anticipate, we wanted to make sure that the work was not premised on any particular set of applications. [But more generally] we knew it had to deal with potentially real-time communications such as voice and video as well as data applications such as messaging.”

“[Because of this] we carefully took all knowledge of applications out of the basic protocol. Originally, a Transmission Control Protocol (TCP) was developed to handle all connection functions. Subsequently, addressing was split out of the TCP structure to form the TCP/IP suite with TCP layered on top of IP. Adjacent to TCP we put in the User Datagram Protocol (UDP) which allowed for real-time communication that was potentially lossy, such as speech.”

It remains a remarkable mental exercise, but these ideas proved pivotal because ultimately applications would indeed be remarkably wide-ranging in their connectivity demands, and this is still the case. He describes the resulting implementation as more future-capable than future-proof.

He recalls: “We knew even at that time that there were going to be radio technologies and Ethernet that had a lossy characteristic and the network didn’t guarantee to preserve the data. So, we had to build an end-to-end recovery mechanism and that’s what TCP/IP was all about.”
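The end-to-end recovery idea in the quote above can be illustrated with a small simulation. This is only a sketch under simplifying assumptions, not TCP itself: the sender waits for an acknowledgement of each chunk before sending the next, and resends when either the data or the acknowledgement is lost. The names `lossy_send` and `reliable_transfer`, the loss rate, and the retry limit are all hypothetical choices made for illustration.

```python
import random


def lossy_send(packet, loss_rate, rng):
    """Simulate an unreliable hop: return the packet, or None if it was dropped."""
    return None if rng.random() < loss_rate else packet


def reliable_transfer(chunks, loss_rate=0.3, max_tries=50, seed=1):
    """Deliver chunks in order over a lossy link by resending until acknowledged.

    Returns the chunks as seen by the receiver and the number of data
    transmissions the sender needed.
    """
    rng = random.Random(seed)  # seeded so the simulation is repeatable
    received = []
    transmissions = 0
    for seq, chunk in enumerate(chunks):
        for _ in range(max_tries):
            transmissions += 1
            arrived = lossy_send((seq, chunk), loss_rate, rng)
            if arrived is None:
                continue  # data lost in the network; the sender tries again
            # The receiver stores a chunk only once, but acknowledges every copy.
            if arrived[0] == len(received):
                received.append(arrived[1])
            ack = lossy_send(arrived[0], loss_rate, rng)
            if ack == seq:
                break  # acknowledgement reached the sender; next chunk
        else:
            raise TimeoutError(f"chunk {seq} not acknowledged after {max_tries} tries")
    return received, transmissions


if __name__ == "__main__":
    data, sends = reliable_transfer(["net", "work", "of", "networks"])
    print(data, sends)
```

The network in this sketch never promises delivery; reliability comes entirely from the two endpoints, which is the design choice the quote describes.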

In fact, as he points out, many future applications were envisaged, in some form, early in the development. “We adapted the ARPANET protocols for email, file transfer and remote access to time-shared systems. We were experimenting with supporting streaming voice and video so we were anticipating these applications,” he says.

Vinton Cerf, VP & Chief Internet Evangelist, Google, USA

A map for everyone

People and institutions found they could colour the blank map of the nascent Internet. And they could do it extremely rapidly. In little more than a decade, it moved from origins based on a small number of institutional mainframe computer environments to a publicly accessible worldwide technology. Well within a single working lifetime, as a new socio-economic map of the world, it has become accessible to nearly four billion people.

Along the way, the spread and take-up of TCP/IP was propelled by advances, some seemingly tangential, that involved many more Internet stakeholders and actors. Vinton Cerf himself suggests there were many such points: the adoption of TCP/IP by all ARPA-sponsored networks; its adoption and promotion by key federal institutions; and above all, the commercialization of Internet services, which allowed private sector participation in Internet development.

The early 1980s saw the rise of the IBM PC and a rapid growth in local area networking which further fed demand into wide area internetworking. On an infrastructural level, all-IP networks have replaced circuit-switched ones and broadband networks have appeared.

Users have seen enormously favourable connectivity economics as network costs have plummeted. The World Wide Web, the Mosaic browser, and the rise of search engines have added still further impetus, he comments. The smartphone joined mobility to the world of IP in 2007, and the proliferation of social networks like Facebook and YouTube “have all dramatically altered the shape of the Internet in terms of what people do with it.”

In the background, however, still sits the TCP/IP map, as fundamental as it is unstoppably universal.

New maps for outer space?

In the meantime, he has been keen to see the limits of TCP/IP itself stretched further still and, as he has pointed out at past PTC events, wants network designers to “take advantage of different paradigms.”

Some of these new protocol concepts may lead to new maps. Communicating with deep-space probes headed through the solar system (and beyond) is one of these challenges. Beyond making space communications more effective, the Interplanetary Internet also poses an extreme connectivity and network management challenge, and the techniques developed could be useful in comparably demanding earth-based environments.

“We essentially had to redo the protocols to take advantage of [adding] memory in the network where we could store data that was in transit whilst waiting for a link to come up because orbital mechanics essentially limits the time when the radio connection is feasible,” he points out.

Beyond earth orbit (within which conventional Internet connectivity is still available), communications are invariably subject to substantial delays and errors. Established IP practice generally works within terrestrial environments, with modest delays, and allows end-to-end management. In space-borne applications, the network should allow for broken connectivity rather than re-transmitting end-to-end. As a result, developers have favoured store-and-forward techniques. The systems are built to retain the data in the nodes orbiting Mars (or elsewhere), and the data is forwarded when the links become available once more.
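The store-and-forward behaviour can be pictured with a toy model. The sketch below is an illustration under stated assumptions, not the actual interplanetary protocols: `RelayNode`, `simulate`, and the link schedule are hypothetical names, and time is reduced to discrete steps in which the outbound link is either up or down.

```python
from collections import deque


class RelayNode:
    """Hypothetical relay (for example, an orbiter) that keeps data until its link is up."""

    def __init__(self):
        self.stored = deque()   # data held in the node while waiting for a link
        self.delivered = []     # data already forwarded to the next hop

    def receive(self, bundle):
        # Keep the data in the node's memory instead of dropping it.
        self.stored.append(bundle)

    def tick(self, link_up):
        """Advance one time step; forward everything stored only if the link is up."""
        if not link_up:
            return 0
        sent = 0
        while self.stored:
            self.delivered.append(self.stored.popleft())
            sent += 1
        return sent


def simulate(bundles_by_step, link_schedule):
    """Feed arriving data and link availability to one relay, step by step."""
    node = RelayNode()
    for arriving, link_up in zip(bundles_by_step, link_schedule):
        for bundle in arriving:
            node.receive(bundle)
        node.tick(link_up)
    return node.delivered, list(node.stored)


if __name__ == "__main__":
    delivered, waiting = simulate(
        [["a"], ["b"], [], ["c"]],
        [False, False, True, False],
    )
    print(delivered, waiting)
```

In this run the first two items wait in the node through two steps without a link and are forwarded together on the third; the last item is still held when the simulation ends, waiting for the next contact.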

Back on earth, ideas such as broadcast IP technologies using one-to-many wireless communications represent one of several other directions in which Internet-related protocols may evolve.

Your map, your responsibility

In an ever-growing Internet culture, however, cutting-edge ideas may bring their own vulnerabilities. The Internet of Things, he predicts, may be one example. He says he “was very worried” about the risk of denial-of-service attacks that could be generated from abusive aggregated control of unsecured IoT nodes.

Quipping at a PTC presentation that this could lead to a scenario he called Attack of the Intelligent Refrigerators, he emphasises there are nevertheless serious risks. “The designs of the IoT systems have to take these vulnerabilities into account,” he says firmly. There are now signs that IoT protocols will indeed implement security from a node-based point of view, an approach he says is “completely correct,” but he continues to emphasise the need for adequate security architectures.

Network security is one clear risk, he says, but cyber-abuse will likely go much further. The same technology that allows people to productively share valuable content is also being used for abuse: bullying, false news, and malware, as well as denial of service.

But this means, he argues, there is an immediate and fundamental conflict between providing and using services, because half the global population still needs Internet access. “Now we have the problem of trying to simultaneously make the network affordable and accessible and at the same time improving trust in the network and its security.”

This is not an easy equation to solve. Purely technical and legal approaches may only go so far, he admits. Alongside more secure system development, he is particularly keen to see users more educated and discerning on what they are consuming, and is a strong advocate of getting people to think critically about what they see, say, and do in the Internet age.

People, he says, need to ask questions about the information they get: its source, underlying motivation, and the extent to which it can be corroborated. He acknowledges this is “hard work” in intellectual terms and may be asking a lot of the user. But, he argues, “the side effect of [any] unwillingness on the part of the user is they are naively exposed to false information that they take on board and believe is correct.”

Check the map

On a global scale, this abuse becomes an even larger problem, given that the abuser and abused may be in different jurisdictions or countries. More effective international agreements about what is and what is not acceptable, along with the means to prosecute those who cause harm through networks, will help, he says, but he acknowledges there is “a lot of work to be done to formulate reasonable international standards for safety and security, to say nothing of privacy.”

However, he rejects any idea of centralised information governance or censorship. “I don’t think we should have a supreme arbiter that decides what is true and what is false,” he firmly states. “On the other hand, having an informed population—one educated to evaluate risks, as I’ve stated—is good for any country; and informed, in this case, includes defending yourself against potential misinformation and online hazards.”

The outlook is a challenging one, he admits. “My biggest concern is that the network will become less and less trusted unless we find ways of dealing with the problems that are arising.” He points out that fraud—as one example—is an existing fact of the offline world as well, so we should have experience of recognising the problems, but argues that “we have not necessarily figured how to respond…especially in the case of a multi-jurisdiction situation.”

New worlds, new maps

My own takeaway from this fascinating conversation is that the promotion of trust probably joins the pressing need to connect the rest of the global population as the challenges we now face on the current frontiers of the Internet.

But in this multi-decade narrative of past, present, and future, two themes stand out to me, at least. First, the Internet is a still-evolving phenomenon, its map changing daily before our eyes. Second, we—all of us—are actors in this evolution, whether we realise it or not. How tightly we bind it to ourselves and associate with it will certainly determine how what is probably the most remarkable development in recorded economic history is shaped in the years to come.

Dr. Vinton Cerf will be one of our highlight presenters at PTC’18 during the Wednesday Morning Keynotes on 24 January 2018.